APAI 2i12 - Techstack Transformed: There is no AI "bubble", only a permanent evolution

What every modern business must understand about the evolving AI tech

No “Zero-Shot” Without Exponential Data: Pretraining Concept Frequency Determines Multimodal Model Performance

For all the AI “bubble” skeptics, the paper titled “No ‘Zero-Shot’ Without Exponential Data: Pretraining Concept Frequency Determines Multimodal Model Performance”, which came out on April 4th, 2024, is like a silver-tipped whip to help them tame the AI beast.

In case you want the very abbreviated TLDR, it’s this:

The paper is saying, "Hey, AI might not be as smart at coming up with new stuff as we think. It might just be good at remembering and combining stuff it's already seen."

Now, of course, the answer is a bit nuanced, but the two sides of the coin are:

We will run out of data at an exponential rate. And models will be “stuck” in 2023, or whatever the last date is at which OpenAI or any other big player could obtain new training data through public means (note, I did not say legal, grey-zone legal, spirit-of-the-law legal or moral).

OR

Accept that concepts such as data drift and model drift exist. Just as we MANDATE that our doctors, engineers, accountants, architects, etc. keep their professional knowledge up to date or lose their licence, we accept that AI neural networks learn in a similar fashion, by seeing new data often. Therefore we must retrain AI models with new data on a regular basis.

The velocity of the “AI everywhere” movement will reach critical mass in 2024 and will not come down in 2025 or onwards. It will be like the coming of the automobile, where there was no “bubble” and nobody went back to horses.

Bet on the automobile, do not bet on the horses.

Table of Contents:

Lessons from Nvidia GTC

Recommender systems online/offline architecture

The new tech stack

Baking AI into everything

The long term net winners in this AI tech evolution

Lessons from NVIDIA GTC

NVIDIA GTC 2024 is likely the most important AI tech conference of the year. It took place early in the year, and it was a very focused, distilled meeting of the professionals and tech companies that are the true movers and shakers of the industry. If you were not present at NVIDIA GTC in 2024, you are not an influential artificial intelligence player in 2024.

Were you at the Nvidia GTC? Did you meet anybody interesting in line for the many excellent talks? At one of the high quality socials organized by the many awesome players in the field? Let us know, share the experience…

Nvidia GTC provided a lot of lessons, and I will only focus on the two needed for this article:

2024 is all about reasoning

Hardware is the heart of this whole AI revolution - data centers and IoT will boom

Foundational models are already very good. Yes, there’s room for improvement, but if you give a car 500hp and its transmission is bad, who cares? What about the tires? What about the brakes? What about passenger comfort?

So, the message is loud and clear: we currently do not deploy AI models properly, and we must build a tech stack on top of these foundational models. This tech infrastructure will be a complex, multi-layered set of systems that use contextual data (vectorstores), fast specialized AI models, specialized middleware AI models, good old software development and a significantly improved UX. (By UX, think user experience, DO NOT THINK user interfaces. This is where UX professionals pull their hair out explaining why UX is vastly different from designing a UI, and how much more complex UX is for modern products.)

Secondly, the message is loud and clear that two things are needed to better support proper deployment of AI: specialized hardware and specialized software for this hardware. Enter AI data centers and the fabulous world of IoT. Yes, they are different things, but they both must obey the laws of physics. IREN (Iris Energy) had an excellent talk at NVIDIA GTC on how all this AI stuff is realistically constrained by our current hardware, our current energy consumption, the delivery of this energy and future planning for energy usage for AI purposes. This means that data centers that use renewable energy and are purpose-built for GPU-dense workloads are key to our society’s expansion into a scalable and sustainable AI-powered revolution.

IoT (Internet of Things) is equally physically limited by wear and tear, power consumption, dust, UV, environmental strain, manufacturing limitations, etc. However, IoT is key to bringing proper automation to our connected world. IoT will deliver the majority of the new data that AI systems use to adapt to our changing world. Right now we must be very purposeful about cleaning up human transactional data, prepping it and feeding it into training sets so we can build foundational models. IoT can generate very “clean” passive data that helps massively with context when we want to ask a question.

Recommender systems Online/Offline Architecture

The internet offers a lot of categories of experiences (information, entertainment, computing, social, etc.). While a lot of the info is free, the backbone powering all this is still the commercial side of things. With so many companies trying to convince you to give them attention, money, or both, how do we automate this process, keep some control over it, and stay focused, contextual and relevant? Nobody wants to hear about snow shovels if they live in California and are looking for backyard pool reviews…

Enter Recommender systems.

Recommender systems currently rule the commercial side of the internet and will only get more sophisticated and relevant. The one downside is that they are very expensive for the moment, but that is changing.

Want an example? Do you ever use Amazon shopping? Do you ever browse Netflix’s recommended movie list? You ever shop on Etsy?

Pinterest, Instagram, Facebook, etc.: all social networks have massively expensive and sophisticated recommender systems in place.

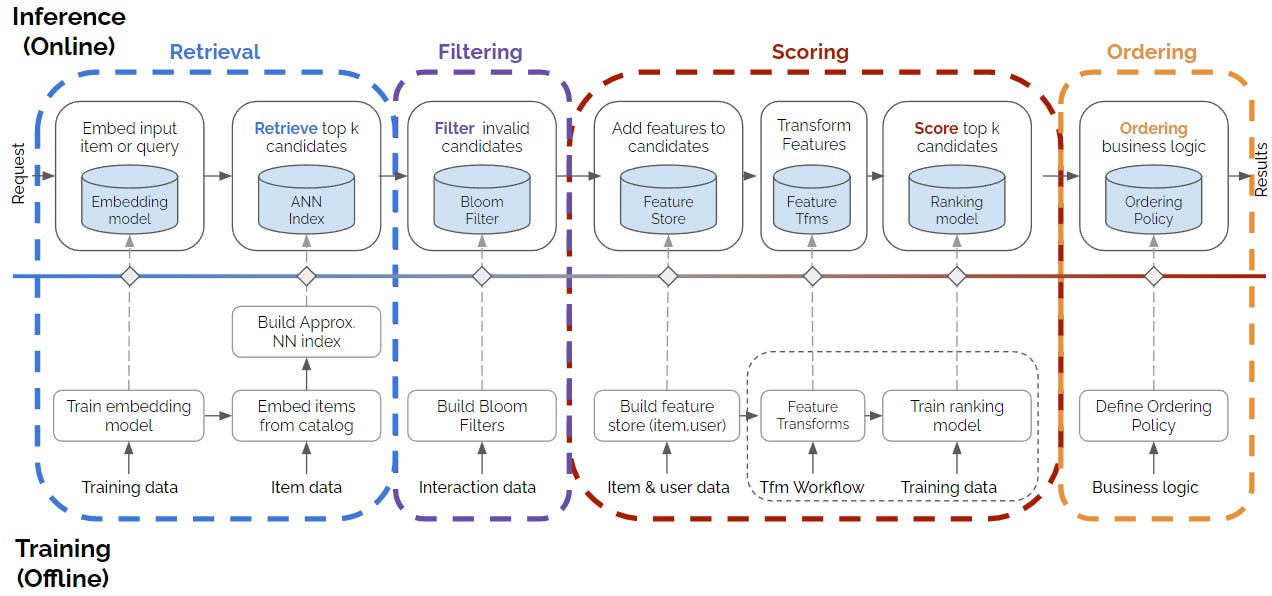

Recommender systems have the concept of online and offline pipelines.

Source: Article on recommender systems by Even Oldridge and Karl Byleen-Higley.

Online is the part people see and interact with in realtime. People look for info on products, and a recommender system pulls similar data (retrieval), then filters out invalid candidates (e.g. out-of-stock items), then scores the remaining list using a weighting that is relevant to the user (e.g. favouring local products by small businesses over mass-produced ones), and finally orders that list (remember when you open Pinterest and there are some items at the top, but you can scroll to see more? That’s the “ordering” part). That all happens ultra fast.
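To make the four online stages concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the `Item` fields, the toy catalog, and the scoring rule (popularity plus a bonus for local products) are hypothetical. A production system would use learned models and far larger candidate sets.

```python
from dataclasses import dataclass


@dataclass
class Item:
    name: str
    category: str
    in_stock: bool
    is_local: bool
    popularity: float  # hypothetical score between 0 and 1


# Toy catalog, invented for this example.
CATALOG = [
    Item("pool skimmer", "pool", True, False, 0.9),
    Item("handmade pool float", "pool", True, True, 0.6),
    Item("pool heater", "pool", False, False, 0.95),
    Item("snow shovel", "winter", True, False, 0.8),
    Item("pool cover", "pool", True, False, 0.4),
]


def retrieve(catalog: list[Item], category: str) -> list[Item]:
    """Stage 1: pull candidates similar to what the user is looking for."""
    return [item for item in catalog if item.category == category]


def filter_candidates(candidates: list[Item]) -> list[Item]:
    """Stage 2: drop invalid candidates, here out-of-stock items."""
    return [item for item in candidates if item.in_stock]


def score(item: Item, local_weight: float) -> float:
    """Stage 3: weight each item for this user (bonus for local products)."""
    return item.popularity + (local_weight if item.is_local else 0.0)


def recommend(catalog: list[Item], category: str,
              local_weight: float = 0.5, top_k: int = 3) -> list[Item]:
    """Run retrieval, filtering, scoring, then stage 4: ordering."""
    candidates = filter_candidates(retrieve(catalog, category))
    ranked = sorted(candidates, key=lambda i: score(i, local_weight), reverse=True)
    return ranked[:top_k]


if __name__ == "__main__":
    for item in recommend(CATALOG, "pool", local_weight=0.5, top_k=2):
        print(item.name)
```

With this toy data, the out-of-stock heater and the snow shovel never reach the user, and the local handmade item outranks the more popular mass-produced one because of the local bonus.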

In the background there’s a massive system that keeps retraining the AI models so they keep adjusting to consumer usage patterns. Companies like Pinterest learned they needed to embrace AI, and the retraining of AI, as they adapted to their users’ behaviour and demands.
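One simple way an offline pipeline could decide when to retrain is to compare how recent traffic is distributed against the distribution the model was trained on. The sketch below uses the Population Stability Index (PSI), a common drift metric. It is a technique swapped in here for illustration, not something any of the companies mentioned are known to use. The category shares and the 0.2 threshold (a widely quoted rule of thumb) are assumptions.

```python
import math


def psi(expected: list[float], actual: list[float], eps: float = 1e-6) -> float:
    """Population Stability Index between two distributions over the same bins.

    Both inputs are lists of shares (fractions that sum to roughly 1).
    """
    total = 0.0
    for e, a in zip(expected, actual):
        e = max(e, eps)  # guard against log(0) and division by zero
        a = max(a, eps)
        total += (a - e) * math.log(a / e)
    return total


def needs_retraining(baseline: list[float], recent: list[float],
                     threshold: float = 0.2) -> bool:
    """Flag the model for retraining when drift exceeds the threshold."""
    return psi(baseline, recent) > threshold


if __name__ == "__main__":
    # Hypothetical share of user clicks per product category.
    baseline = [0.5, 0.3, 0.2]  # at training time
    recent = [0.2, 0.3, 0.5]    # last week
    print(round(psi(baseline, recent), 3), needs_retraining(baseline, recent))
```

The offline side would run a check like this on a schedule and kick off retraining only when user behaviour has actually moved.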

Pinterest had a very interesting and relevant talk at NVIDIA GTC where they described their organic evolution from CPU-based recommender systems to GPU-based recommender systems. Pinterest’s talk should be a “must view” for all companies that want to understand, from a grass-roots POV, how to make strategic AI investments - I’m looking at you, giant consulting companies claiming depth and breadth around AI.

Pinterest has been doing this for years. They are the real deal.

Nordstrom and Estee Lauder also have very credible AI practices and I highly recommend attending tech conferences where any of them send their staff - from software developers to executives. Yes, their executives actually know and understand how to use AI.

What do Pinterest, Nordstrom and Estee Lauder have in common? They have baked AI into their digital infrastructure and, most importantly, into the user experience (UX).

The new tech stack

The new tech stack will contain online/offline systems for all AI based products and services. The need to constantly reinforce AI responses and behaviour with new and relevant data will dictate that whoever adapts will survive and thrive in this new era of AI dominance.

The new tech stack will use AI as new API calls for decision making. It is just a side step, an evolutionary step. All the existing microservices need to do is use an AI model of some kind to return a response to an API call. And vectorstores will become our new friends for NLP systems and contextual data retrieval.
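As a rough sketch of what "AI as an API call" could look like inside an existing microservice, the handler below takes a JSON request, asks a model for a decision, and returns a JSON response. The model here is a hypothetical keyword stub so the example runs on its own. In practice it would be replaced by a call to whatever model the service actually hosts or consumes. The field names `text`, `decision` and `confidence` are assumptions.

```python
import json
from typing import Callable, Tuple

# A "model" is anything that maps input text to (decision, confidence).
Model = Callable[[str], Tuple[str, float]]


def toy_intent_model(text: str) -> Tuple[str, float]:
    """Hypothetical stand-in for a real AI model call."""
    if "refund" in text.lower():
        return "route_to_billing", 0.9
    return "route_to_general_support", 0.5


def handle_request(body: str, model: Model = toy_intent_model) -> str:
    """Microservice handler: same JSON in / JSON out contract as before,
    but the decision now comes from a model instead of hard-coded rules."""
    payload = json.loads(body)
    decision, confidence = model(payload["text"])
    return json.dumps({"decision": decision, "confidence": confidence})


if __name__ == "__main__":
    print(handle_request(json.dumps({"text": "I would like a refund please"})))
```

The callers of the service do not need to change: the contract stays a plain API call, which is why this is a side step rather than a rewrite.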

Baking AI into everything

A Google product manager (formerly at Netflix) did a demo of a poor man’s recommender system. He just asked Google’s Gemini to give him a music recommendation based on 5 random questions. The result was not impressive, but it proved the point of what a recommender system is.

And then he echoed the POV of every tech person with credible experience: we must bake AI into the UX, into the middleware, into our backend systems.

Today’s smart UI is responsive and reactive to the many tablets and screen sizes we have. AI-powered smart UI will evolve to contain significantly more complexity.

UX will change based on user interaction: AI classifier models that only need to make fairly simple decisions will determine whether to show simple UI elements, more complex ones, or full-on detailed info.

Context engines will use a confidence score to decide where to fetch data: from a vectorstore, from a general datasource, or by engaging another AI.
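A minimal sketch of such a routing decision, assuming the confidence score is simply the best cosine similarity between the query and the documents in a tiny in-memory vectorstore. The three-dimensional vectors, the document names and the two thresholds are all invented for illustration. Real embeddings have hundreds of dimensions and the thresholds would need tuning.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Hypothetical vectorstore: document name -> embedding.
VECTORSTORE = {
    "pool maintenance guide": [0.9, 0.1, 0.0],
    "snow shovel reviews": [0.0, 0.2, 0.9],
}


def route(query_vec: list[float], high: float = 0.8, low: float = 0.4):
    """Pick a data source based on how confident the vectorstore match is."""
    best_doc, confidence = max(
        ((doc, cosine(query_vec, vec)) for doc, vec in VECTORSTORE.items()),
        key=lambda pair: pair[1],
    )
    if confidence >= high:
        return "vectorstore", best_doc    # strong match: use stored context
    if confidence >= low:
        return "general_datasource", None  # weak match: fall back to broader data
    return "another_ai", None              # no usable match: escalate


if __name__ == "__main__":
    print(route([1.0, 0.0, 0.0]))
    print(route([0.5, 0.5, 0.5]))
    print(route([0.0, 1.0, 0.0]))
```

The design choice worth noting is that the router itself stays cheap and simple. The expensive step (engaging another AI) only happens when the cheaper sources have nothing relevant.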

And finally, all this will be governed in an offline mode by other AI tools that analyze the behaviour of the realtime AI decisions and user interactions, and then push new rules, context and reasoning guardrails to the AI that has now been baked into our tech stack.
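As one hedged illustration of that offline loop, the sketch below reads a log of realtime decisions (the model's confidence and whether the user accepted the result) and derives a new guardrail: the lowest confidence threshold at which the acceptance rate meets a target. The log format, the target and the candidate thresholds are assumptions. A real governance layer would push far richer rules and context.

```python
# Each log entry: (model confidence, did the user accept the result?)
DecisionLog = list[tuple[float, bool]]


def tune_threshold(log: DecisionLog,
                   target_acceptance: float = 0.8,
                   candidates: tuple[float, ...] = (0.5, 0.6, 0.7, 0.8, 0.9)) -> float:
    """Return the lowest candidate threshold whose accepted share meets the target.

    Falls back to the strictest candidate if none qualifies.
    """
    for threshold in candidates:
        kept = [accepted for confidence, accepted in log if confidence >= threshold]
        if kept and sum(kept) / len(kept) >= target_acceptance:
            return threshold
    return candidates[-1]


if __name__ == "__main__":
    # Hypothetical log collected from the online system.
    log = [
        (0.55, False), (0.62, True), (0.65, False), (0.72, True),
        (0.75, True), (0.85, True), (0.95, True),
    ]
    new_guardrail = tune_threshold(log)
    print(f"push to online system: min_confidence={new_guardrail}")
```

The online system never tunes itself in this sketch. It only receives the updated threshold, which keeps realtime decisions fast and leaves the analysis to the offline side.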

Long Term net winners in the AI evolution

There’s a whole industry waiting to be developed in the next 10 years, all feeding AI-powered systems with various levels of data that will either supply context (i.e. high-quality data) or inform the system when it needs to be retrained.

GPU cluster farms will become part of the fabric of our physical layer, no longer shunned in corners of bitcoin mining, but an integral part of our infrastructure.

So, think, “Energy”.

Buy stock in energy companies.

They are the real winners.

Do you know of any particular companies that will be part of this net “winner” group in the AI evolutionary tide? Leave a comment or DM me, I’d love to hear from you.

“GPU cluster farms will become part of the fabric of our physical layer, no longer shunned in corners of bitcoin mining, but an integral part of our infrastructure.”

Do you mean what Altman is calling Compute? You say ‘energy’, is there no established name for it yet?

How viable do you think dePIN becomes? (Decentralized Physical Infrastructure Networks) community or regional AI that draws from local idle compute? Would the corporate world have any pressures to go this direction with it, or will they continue to try and centralize?

The recent paper highlights that AI models heavily rely on pre-existing data, challenging the belief in their innovative capabilities. It's crucial to acknowledge data and model drift, necessitating regular updates. This evolution in AI isn't a bubble—it's akin to the irreversible shift from horses to automobiles.